Pro camera gear has been using this for a few years now and seeing this stuff in audio gear is a huge plus.

Being the first standalone to create stems on the fly without a PC doesn’t mean anything in itself.

If the quality of the stems produced standalone is just average, this is of no interest. On the other hand, if the quality is at least as clean as VirtualDJ’s Stems 2.0, then that’s a big yes.

And what about rendering time on the fly? If the device takes 2 minutes to render the stems in high quality for a song that lasts 2 minutes 40, it will be complicated to use in a real situation.
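To put a number on that example, here is a minimal sketch of the ratio between render time and track length (often called a real-time factor). The function name is made up for illustration, and the two durations are just the hypothetical ones from the paragraph above, not measurements of any device.

```python
def real_time_factor(render_seconds: float, track_seconds: float) -> float:
    """Render time divided by track length; below 1.0 means faster than playback."""
    return render_seconds / track_seconds

track_seconds = 2 * 60 + 40   # a 2:40 track
render_seconds = 2 * 60       # hypothetical 2-minute render

rtf = real_time_factor(render_seconds, track_seconds)
print(f"real-time factor: {rtf:.2f}")
```

A factor this close to 1.0 means the stems would only be ready about when the track could have finished playing, which is the problem being described.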

I’m not a big fan of this vertical screen format. But if Denon releases a two-channel successor to the Prime 2 with a classic horizontal screen of at least 8 inches, 7-inch jog wheels, effects triggers and the functionality of this System One, then I might be tempted, even without a motorized platter, as long as it has external line/phono inputs.

But I’m talking about a real product from the Prime range, with the same build quality, not the cheap build quality of the SC Live series.

This could be an ideal unit for mobile events.

Live MPCs are mainly intended for creating/cutting/isolating samples, and for this type of use where you deal with parts shorter than 30 seconds, this choice of hardware chip can be relevant and adapted to the intended use.

But for a DJ product intended to process complete tracks lasting several minutes, the RK3588 is not powerful enough to perform this rendering quickly, even when combining its AI and onboard GPU modules. It is not a suitable chip for this specific use case.

The RAM only serves as temporary storage for the stems: the more of it there is, the more stem audio you can hold. But it has no impact on processing speed, which depends entirely on the SoC.
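For a rough sense of how much RAM the stems themselves take, here is a back-of-the-envelope sketch. Every constant is an assumption chosen for illustration (uncompressed 44.1 kHz stereo, 32-bit float samples, four stems), not a known detail of how Engine OS or any other device stores stems.

```python
SAMPLE_RATE = 44_100    # Hz (assumed)
CHANNELS = 2            # stereo (assumed)
BYTES_PER_SAMPLE = 4    # 32-bit float (assumed)
STEMS = 4               # e.g. vocals, drums, bass, other (assumed)

def stems_bytes(track_seconds: float) -> int:
    """Uncompressed size in bytes of all stems for one track."""
    return int(track_seconds * SAMPLE_RATE * CHANNELS * BYTES_PER_SAMPLE * STEMS)

track_seconds = 2 * 60 + 40          # the 2:40 example track
size = stems_bytes(track_seconds)
print(f"{size / 2**20:.0f} MiB for a {track_seconds} s track")
```

Under these assumptions a 2:40 track comes to roughly 215 MiB, so more RAM means more tracks' worth of stems held at once, while the time to compute them is unchanged.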

Changed title a bit

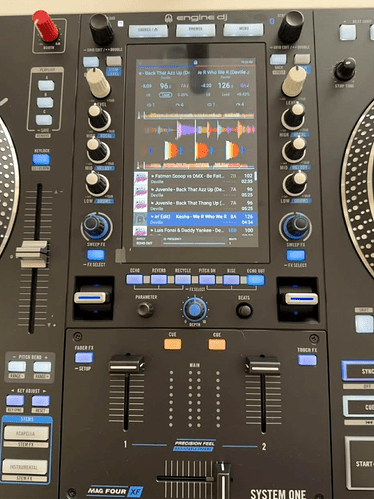

No one wants to pixel peep and speculate on any features? Like the dedicated acapella/instrumental stems buttons, that are also labeled Stems FX? Or the silent cue function, or the dedicated buttons for Fader FX, Touch FX and some global functions like Mixer / EQ, Record and Quantize? And are those screens above the performance pads?

Well, we all already know what these functions are used for, and they already come from other Rane/Denon products. No real surprises or game-changing features here.

Furthermore, there is no guarantee at this stage that all the functions can be used in standalone mode, in particular the stems-related ones. Some of them might only work in controller mode with Serato.

But it does. A DJ isn’t running 128 channels of bi-directional audio and FX; a DJ’s needs are way less demanding than you think. Plus the new Rockchip has an edge AI processing chip; combine that with the power of the graphics processor and a cloud service (music LLM with stems information) and it’s absolutely doable. I’ll make an open friendly bet that realtime stems on a DJ AIO will be here in less than 6 months.

Either you are very optimistic, or you are dreaming with your eyes open!

The RK3588 is a cheap chip worth less than $60, built on technology that is 5 years old; it is not an M4. A cutting-edge AI chip? It barely manages 10 TOPS of AI performance, while it takes at least 30 to calculate stems in real time for complete tracks in less than a minute. As for the embedded GPU, there is a difference between decoding and processing video streams and doing 3D compute.

And bad luck for you, it is indeed the 3D part which offers the processing power necessary for processing stem algorithms, not the part which takes care of video processing.

So an RK3588 may be able to do a whole bunch of 8K processing on AV1 codecs or whatever, but its GPU is mediocre in terms of raw compute. Processing video is child’s play for any current SoC; processing high quality stems in real time is a completely different story.

All of this has already been covered.

Neither. I just look at the bottom of the toolbox to find the right tool. inMusic has the tools. Have you looked into non-cloud open source AI audio separation?

I didn’t say wholly onboard processing, did I? All that scraped DJ library info is going somewhere.

Well, silent cue and stems FX would be new in a standalone device. The latter is labeled so prominently that I doubt it will only work with Serato.

Of course it’s possible that this is part of Engine OS 5.0 and that some functionality will not come to older InMusic units.

Yes, it has. Thanks for all of your technical details / reality check @Gaian. Here

And, this thread is particularly pertinent to this discussion.

Current stem separation algorithms, especially those aiming for precise, high quality separation, are heavy and complex, and they require far greater computing power than any video decoding.

To achieve a convincing result for DJ use, i.e. preserving a high quality of separation while maintaining a fast processing time, you basically have two options:

- Either you have an AI calculation unit dedicated to this task, in which case this unit must have processing power of at least 30 TOPS.
- Or you do not have a dedicated AI calculation unit, in which case the 3D compute part of the GPU takes care of this task. For the GPU to handle it quickly, it must have computing power at least equivalent to a GTX 1060, i.e. around 5 TFLOPS.

Below these values everything is a compromise: either you lower the separation quality to keep an acceptable processing speed, or you keep a high separation quality and the processing time becomes excessively long (this is what happens on the latest MPC Live).

The RK3588 has a 10 TOPS AI unit and an onboard GPU whose raw power is 0.6 TFLOPS FP32.

The numbers are the numbers; you can’t make an omelette with hard boiled eggs. So if they manage to make stems in real time with this hardware, there will necessarily be concessions or compromises somewhere, either on the quality of the rendering or on the processing time.

As for AI calculations offloaded to the cloud, that could be a solution, but with a lot of constraints in return (a permanent internet connection would be required, etc.).
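Putting those figures side by side, here is a small sketch of the gap. All four numbers are the ones quoted in this post (the 30 TOPS and 5 TFLOPS thresholds are the poster’s own estimates, and the RK3588 figures are as stated here, not independently checked). The `shortfall` helper is hypothetical, and the comparison naively assumes the work scales linearly with raw compute.

```python
# Figures as quoted in this thread, not independently verified.
REQUIRED_TOPS = 30.0     # poster's estimate for a dedicated AI unit
REQUIRED_TFLOPS = 5.0    # poster's estimate for GPU compute (GTX 1060 class)
RK3588_TOPS = 10.0       # AI unit figure quoted above
RK3588_TFLOPS = 0.6      # GPU FP32 figure quoted above

def shortfall(available: float, required: float) -> float:
    """How many times more compute the threshold asks for than is available."""
    return required / available

npu_gap = shortfall(RK3588_TOPS, REQUIRED_TOPS)
gpu_gap = shortfall(RK3588_TFLOPS, REQUIRED_TFLOPS)
print(f"AI unit: {npu_gap:.1f}x short, GPU: {gpu_gap:.1f}x short")
```

On these numbers the AI unit is about 3x short and the GPU about 8x short of the stated thresholds, which is where the compromise on quality or processing time would have to come from.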

New on a standalone device, yes, but not new in absolute terms: these are just functions taken from products such as the Rane Performer / Rane One MKII and adapted to Engine OS.

For the moment I think we must remain cautious and not speculate too much, because there are still many grey areas around the real performance and capabilities of this product in standalone mode, in particular around stem processing.

The rest of the features (effects, etc.) are easy for an RK3588 to handle.

Wrong. Do you know how Shazam works? It’s just looking up data points in a music library, not audio processing.

Stems are data point processing, not audio processing. It’s taking the data points and telling a multi-band EQ to knock back or boost frequencies. This isn’t a new process; it’s just that a human isn’t doing it manually.

AI isn’t intelligence, it’s a buzzword. It should actually be called ML (Machine Learning), but that doesn’t sell products or convince people to build data centers. You’re hinging things on horsepower; I’m hinging it on coding and processes. The difference between those two is just like cars: how fast can I get to 60mph when I stomp on the gas, as opposed to what’s my gear & torque ratio. So in your opinion you need American muscle to till a field, which is wild and inefficient, whereas I’m saying a Unimog will get the job done better, faster and cleaner. Both do the same job, but at what expense?

Please don’t compare the Shazam algorithm with stems algorithms. Stem separation is actually much more complex than boosting/cutting with a multiband EQ. It is about extracting/isolating frequencies in relation to their position in a stereo space, because in an audio track you can have frequencies that overlap and mix on top of each other: certain frequencies of a voice merge with certain frequencies of a lead or chords, for example.

And the whole job of these algorithms is precisely to dissociate these frequencies in such a way that there is 1) no audio residue from another stem on a similar frequency and 2) no frequencies are eaten or degraded during the process of extracting another stem. It’s much more complex than working with multiband equalization.
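To make the overlap point concrete, here is a toy sketch with made-up magnitude values. It compares a fixed per-band EQ gain with a per-frame, per-bin ratio mask, which is one common way of framing separation and not a description of how Serato, VirtualDJ or Engine DJ actually work. The mask below is computed from the known sources, which a real separator cannot do: it has to estimate something like it from the mix alone, and that estimation is the expensive part.

```python
# Toy magnitude "spectrograms": rows are time frames, columns are frequency bins.
# Both sources use bin 1, so they overlap in frequency (values are made up).
vocal = [[0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.0, 1.0, 0.0]]
chords = [[0.0, 0.0, 0.0],
          [0.0, 1.0, 1.0],
          [0.0, 1.0, 1.0]]
mix = [[v + c for v, c in zip(vr, cr)] for vr, cr in zip(vocal, chords)]

def apply_eq(spec, gains):
    """Fixed gain per frequency bin, identical for every time frame."""
    return [[x * g for x, g in zip(row, gains)] for row in spec]

def ratio_mask(target, other):
    """Per-frame, per-bin share of the mixture that belongs to the target."""
    return [[t / (t + o) if (t + o) > 0 else 0.0 for t, o in zip(tr, orow)]
            for tr, orow in zip(target, other)]

def apply_mask(spec, mask):
    """Multiply each time-frequency cell of the mix by its mask value."""
    return [[x * m for x, m in zip(sr, mr)] for sr, mr in zip(spec, mask)]

def error(estimate, truth):
    """Total absolute difference between an estimate and the true source."""
    return sum(abs(e - t) for er, tr in zip(estimate, truth) for e, t in zip(er, tr))

eq_vocal = apply_eq(mix, [0.0, 1.0, 0.0])            # keep only the vocal's band
mask_vocal = apply_mask(mix, ratio_mask(vocal, chords))

eq_error = error(eq_vocal, vocal)
mask_error = error(mask_vocal, vocal)
print(f"EQ residue: {eq_error}, mask residue: {mask_error}")
```

In this toy case the EQ leaves chord residue in the vocal band whenever the two overlap, while the mask does not.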

We are almost at the same level of complexity as algorithms used in 3D games such as Ray Reconstruction which is used to simulate light radiation effects.

In your opinion, why do Serato and Virtual DJ require powerful GPUs plus Intel i5/Ryzen 5 processors, or M1–M4 on Apple platforms, with 16 GB of RAM for stems extraction, if a $60 RK3588 could do the same job with a multiband EQ? For the pleasure of annoying the world, perhaps?

And don’t come and tell me that it’s because Windows uses a lot of resources and is not optimized, while Engine on a Linux kernel would do better. Even with the best optimization in the world, you cannot expect a $60 part to perform miracles and match the performance of a $400 part.

You have no idea what you’re talking about.

The problem with downloading pre-analyzed stem files for tracks from an off-site storage solution, hosted by InMusic, comes down to licensing. There are very specific license agreements needed to host music for the purposes of DJ Streaming. It is possible that InMusic partnered with an existing streaming partner for this type of feature, but InMusic on their own doesn’t have the capital needed to invest in their own solution, while still meeting the royalty requirements of the big four.

Excellent job, being able to switch from Serato to Engine DJ without any computer, like the Prime series.