Continued, part 3.

The task was to perform all the necessary functions simultaneously on a single MCU core: digitize the analog signal, extract its melodic and rhythmic components, convert them into visualization data, and control the dynamics of the light.
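The article does not say how CLUBBEST extracts the rhythmic part, so the following is only a hypothetical sketch of one common, cheap technique that fits on a small MCU: an energy-based beat detector. It computes the mean-square energy of each block of ADC samples, keeps an exponential moving average of that energy, and flags a beat when the current block is clearly louder than the average. The names `beat_detect`, `block_energy`, `BEAT_RATIO` and the 1/8 smoothing factor are illustrative assumptions, not values from the project.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical energy-based beat detector (not the CLUBBEST algorithm). */
typedef struct {
    uint64_t avg_energy; /* running average of mean-square block energy */
} beat_detector_t;

/* Assumed threshold: a block must be this many times louder than the average. */
#define BEAT_RATIO 2u

/* Mean square of one block of signed 16-bit ADC samples (n must be > 0). */
static uint64_t block_energy(const int16_t *samples, size_t n) {
    uint64_t acc = 0;
    for (size_t i = 0; i < n; i++) {
        int32_t s = samples[i];
        acc += (uint64_t)(s * s); /* s * s <= 2^30, fits in int32_t */
    }
    return acc / n;
}

/* Returns true if this block looks like a beat, then updates the average. */
static bool beat_detect(beat_detector_t *d, const int16_t *samples, size_t n) {
    uint64_t e = block_energy(samples, n);
    bool beat = e > BEAT_RATIO * d->avg_energy;
    /* EMA update, avg += (e - avg) / 8, written with unsigned arithmetic only */
    d->avg_energy = d->avg_energy - d->avg_energy / 8 + e / 8;
    return beat;
}
```

Because the average starts at zero, the first non-silent blocks are reported as beats, so in practice the detector needs a short warm-up. Extracting the melodic part would additionally require frequency analysis (for example an FFT or a filter bank), which this sketch does not attempt.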

The difficulty with visualization is that you first have to decide what the light sources will be. Given my resources, I can only afford to experiment with LEDs that have built-in drivers, that is, light sources of a single type, all with the same parameters and characteristics. Moreover, their positions are always fixed, which simplified the task from the start. To connect this technology to modern fixtures that use the standard DMX interface, I would naturally need the help of a company that does this professionally, and that design option would also require a mechanism for configuring the lights.

Now I want to address the advantages and disadvantages of this approach right away.

The advantage is that the LED types I use are widely available, stocked by PCB assembly services, and cheap. When ordering printed circuit boards, it is very convenient to have them assembled at the same time, which greatly simplifies the whole development process and reduces its cost.

The disadvantages: first, these LEDs use a single-wire interface and are very slow. Yes, it is faster than DMX, but not fast enough for building large visualization panels. Second, the brightness depth is only 8 bits per color, which is far too little for proper color reproduction, so you have to simplify the range of light. Looking ahead, there are now LEDs with built-in drivers and faster SPI-type interfaces that run at up to 40 MHz, which already solves the problem of low data rates. There are also LEDs with 16-bit brightness per color. But then everything comes down to the cost of the MCU and of the peripherals needed for data transfer.
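The speed comparison can be made concrete with simple arithmetic: frame time is the number of bits per frame divided by the line bit rate. The article names no specific parts, so the figures below are assumptions: a typical single-wire LED (WS2812 class) at 800 kbit/s with 24 bits per LED, DMX512 at 250 kbit/s with 11 bits per slot (start, 8 data, 2 stop) and 3 slots per RGB fixture, and a hypothetical 40 MHz SPI-type LED with 48 bits per LED (16 bits per color, ignoring protocol framing). The helper `frame_time_us` is illustrative.

```c
#include <stdint.h>

/* Time to clock out one frame, in microseconds (rounded down).
   Ignores reset/latch gaps, DMX break/MAB and the DMX start code. */
static uint32_t frame_time_us(uint32_t n_leds, uint32_t bits_per_led,
                              uint32_t bit_rate_hz) {
    uint64_t bits = (uint64_t)n_leds * bits_per_led;
    return (uint32_t)(bits * 1000000u / bit_rate_hz);
}
```

Under these assumptions, 68 single-wire LEDs take 2040 µs per frame, while 68 RGB fixtures over DMX512 take 8976 µs, and 100 LEDs on the 40 MHz link take only 120 µs. A 1000-LED panel on the single-wire bus would need 30 ms per frame, i.e. roughly 33 frames per second at best, which is why such LEDs do not scale to large panels.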

Let me explain why 16 bits of brightness are better than 8. Imagine you are in a cinema: the movie starts, the house lights dim, and you watch the light get dimmer and dimmer until it goes out completely. That kind of smooth fade cannot be done with 8-bit LEDs: when the brightness changes between 0 and 1, a large jump in illumination is visible. With 16-bit LEDs it is easy. But if a core delivering 5-10 MIPS can handle 8-bit brightness processing, 16-bit brightness requires a core at least 16 times more powerful!
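The cinema example can be checked numerically. Perceived brightness is strongly non-linear in PWM duty, so a smooth fade is usually driven through a correction curve. This sketch assumes a simple square-law curve (gamma 2, a stand-in for the more common gamma of about 2.2) and maps a 256-step perceptual fade to 8-bit and 16-bit PWM codes; the function names are hypothetical.

```c
#include <stdint.h>

/* Perceptual level 0..255 -> 8-bit PWM code, square law, rounded to nearest. */
static uint8_t level_to_pwm8(uint8_t level) {
    uint32_t sq = (uint32_t)level * level;      /* 0..65025 */
    return (uint8_t)((sq + 127u) / 255u);       /* round(sq / 255) */
}

/* Same curve mapped to a 16-bit PWM code. sq * 65535 stays below 2^32. */
static uint16_t level_to_pwm16(uint8_t level) {
    uint32_t sq = (uint32_t)level * level;
    return (uint16_t)((sq * 65535u + 32512u) / 65025u); /* round(sq*65535/65025) */
}

/* Count how many distinct codes a full 256-step fade actually produces. */
static unsigned distinct_pwm8(void) {
    unsigned count = 1; /* level 0 */
    for (unsigned l = 1; l < 256; l++)
        if (level_to_pwm8((uint8_t)l) != level_to_pwm8((uint8_t)(l - 1)))
            count++;
    return count;
}

static unsigned distinct_pwm16(void) {
    unsigned count = 1;
    for (unsigned l = 1; l < 256; l++)
        if (level_to_pwm16((uint8_t)l) != level_to_pwm16((uint8_t)(l - 1)))
            count++;
    return count;
}
```

With this curve, fade levels 0 to 11 all map to code 0 on the 8-bit LED, so the light stays off and then jumps straight to 1/255 of full power; on the 16-bit LED each of the 256 fade levels gets its own code.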

I had to make many compromises during development, but the deciding factor was always minimum cost.

Once the visualization data are prepared, they must be sent to the output optical device (OOD). For this, a mechanism called visualization matrices was developed; its main task is to control the symmetry and variety of the generated images.
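The article does not describe how visualization matrices are implemented. One plausible reading, offered here purely as a hypothetical sketch, is a lookup table that tells each physical LED which logical value (for example a spectrum band) to display. Symmetry then lives entirely in the table: the mirror map below places band 0 at the center and repeats the bands outward to both edges. `build_mirror_map` and `apply_matrix` are illustrative names, and the mirror map assumes an even number of LEDs.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical mirror matrix: map[p] = logical index shown on physical LED p.
   Assumes n_phys is even; band 0 sits at the center, bands grow outward. */
static void build_mirror_map(uint8_t *map, size_t n_phys) {
    size_t half = n_phys / 2;
    for (size_t i = 0; i < half; i++) {
        map[half - 1 - i] = (uint8_t)i; /* left half, center outward */
        map[half + i] = (uint8_t)i;     /* right half, center outward */
    }
}

/* Render one frame: each physical LED takes the logical value its map names. */
static void apply_matrix(const uint8_t *map, size_t n_phys,
                         const uint8_t *logical, uint8_t *frame) {
    for (size_t p = 0; p < n_phys; p++)
        frame[p] = logical[map[p]];
}
```

For 6 LEDs and bands {10, 20, 30}, the mirror map is {2, 1, 0, 0, 1, 2} and the frame becomes {30, 20, 10, 10, 20, 30}. The design point of a table like this is that swapping in a different map (linear, rotated, repeated) changes the look of the image without touching the audio analysis code.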

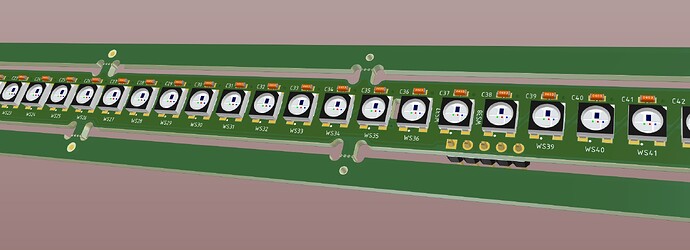

The overall dimensions of the M68 and M100 prototypes were dictated by the technical capabilities of the PCB manufacturers and by customs rules, which allow goods to be imported duty-free up to a certain value. In other words, the fewer PCBs in the device, the cheaper it is. The M68 contains two PCBs; the M100 contains one and uses side-emitting LEDs.

Functionally, projects like CLUBBEST look like this:

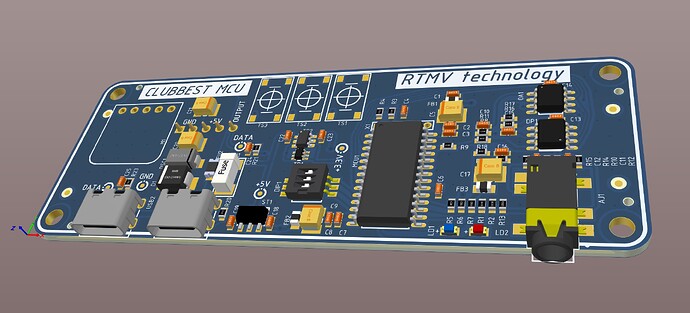

Technically, everything is very simple. All the main work is done by the microcontroller.

Where do the numbers 68 and 100 come from? They are the number of light sources used in each device.

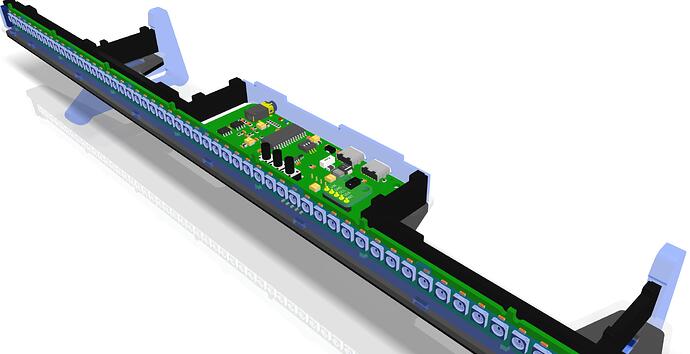

Circuit and PCB design are one side of the issue; the other is the case. The project itself was built two years ago, when 3D printing even a case of this size was problematic. Today there are plenty of 3D printing factories in China and prices have dropped significantly, so I am thinking of making new versions of the case with 3D printing.

The cases for CLUBBEST were made of laser-cut acrylic. There were many variants; this was the final one.

Inside the case, one PCB is the processor board that handles and converts the audio stream itself, and the second is the LED display.

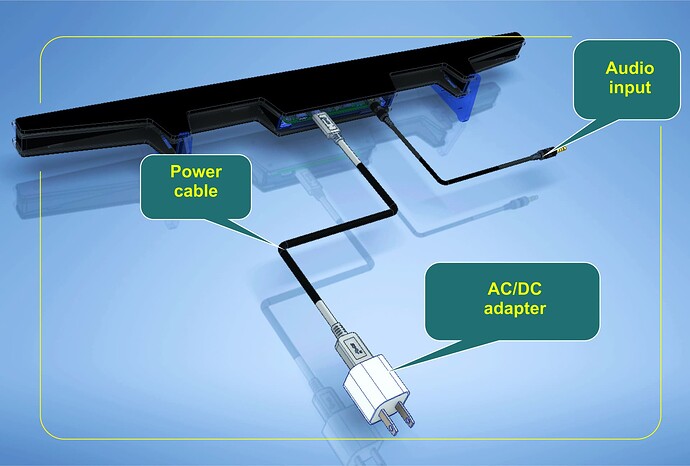

Audio input is via a 3.5 mm jack, power is supplied via USB Type-C, and the second USB connector is for connecting a repeater. The repeater looks exactly like the main unit but contains only an adapter board and an LED board.

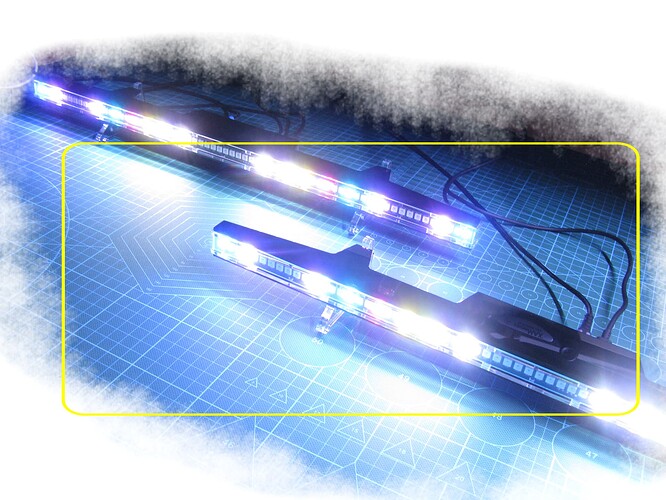

This is what the actual connection looks like for the CLUBBEST M68: one audio cable and one power cable. I think the solution turned out as simple and convenient as possible.

Here you can download a 3D PDF in which you can examine the CLUBBEST M68 case yourself and take it apart into its individual parts.

sborka_v6-osnovnoju2.pdf (2.0 MB)

To be continued…

And this is another video…